How can I backup Azure Table Storage?

Hello!

In addition to my post about Bicep parameters (see here), I want to cover another important topic when storing data in Azure Table Storage: backup!

So today I'm going to introduce my solution: How can I back up Azure Table Storage?

The problem

So let's start with a description of the problem. If you want to back up the table data in your Azure Storage Accounts, you'll notice that Azure offers no native way to do that.

Microsoft confirms this here: https://learn.microsoft.com/en-us/answers/questions/812889/azure-table-storage-recommended-backup-solution

As I absolutely need to back up such important information, I started writing some code.

The solution

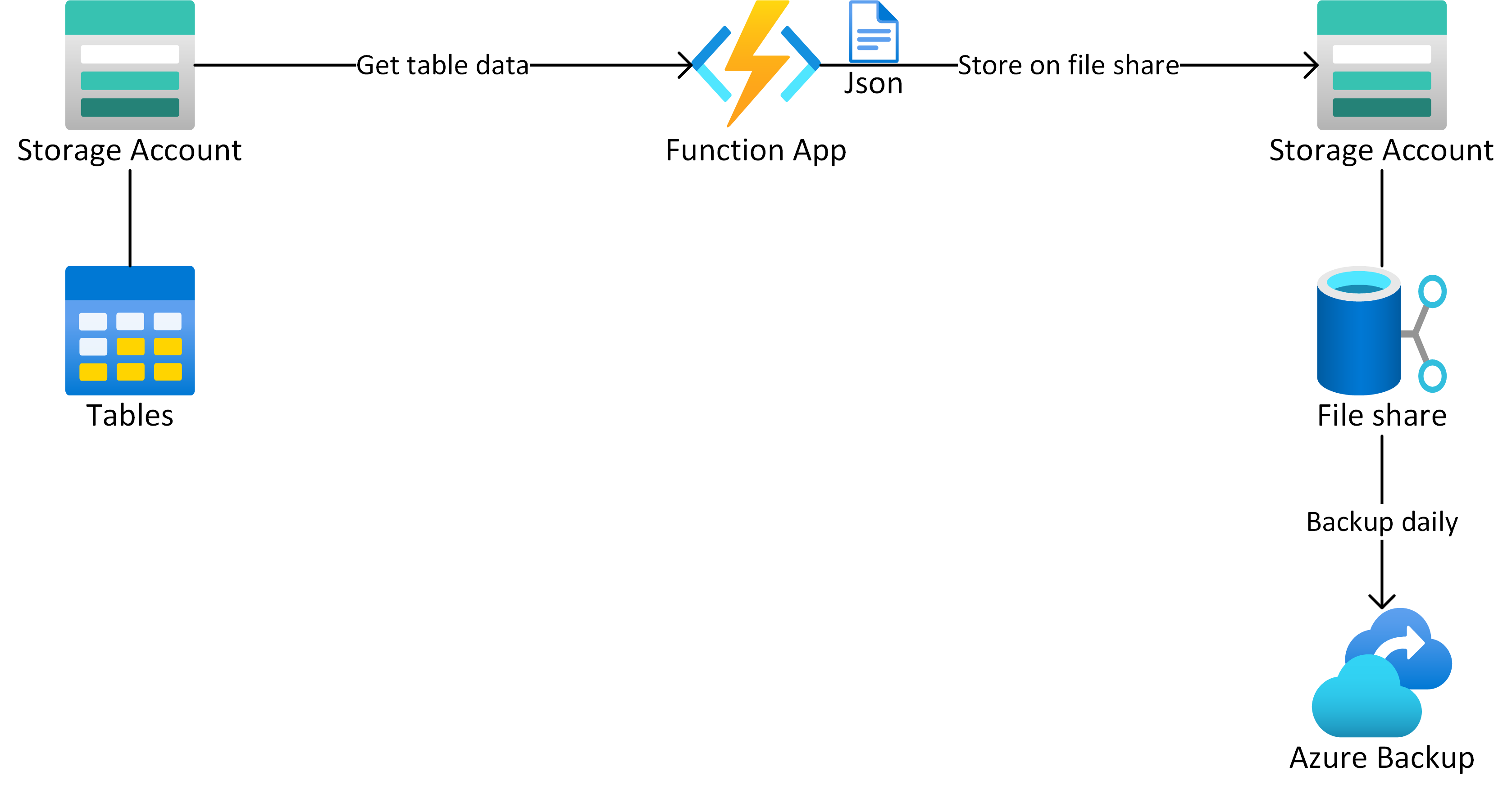

Azure Backup can't back up Table Storage itself, but it can back up Azure File Shares.

For this purpose, we go back to good old JSON files: a PowerShell Function App reads all tables from the source storage account and converts their content into one JSON file per partition key.

After that, the Function App uploads the files to a file share, which is protected by Azure Backup.
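The conversion step above can be sketched as follows. This is a minimal, language-neutral illustration in Python, not the author's PowerShell function: it assumes the entities have already been read from a table as plain dictionaries with JSON-serializable values (real SDK results may contain datetime or binary properties that need extra handling), and the `<table>/<PartitionKey>.json` path layout is a hypothetical naming scheme. Partition keys containing characters that are invalid in file names would also need escaping, which this sketch does not do.

```python
import json
from collections import defaultdict
from typing import Any, Dict, Iterable, List


def partition_files(table_name: str, entities: Iterable[Dict[str, Any]]) -> Dict[str, str]:
    """Group entities by PartitionKey and serialize each group as one JSON document.

    Returns a mapping of hypothetical relative file path -> JSON text.
    """
    groups: Dict[str, List[Dict[str, Any]]] = defaultdict(list)
    for entity in entities:
        groups[entity["PartitionKey"]].append(entity)

    files: Dict[str, str] = {}
    for partition_key, rows in groups.items():
        # Sort by RowKey so repeated exports of unchanged data are byte-identical.
        rows.sort(key=lambda e: e["RowKey"])
        files[f"{table_name}/{partition_key}.json"] = json.dumps(rows, indent=2, sort_keys=True)
    return files


if __name__ == "__main__":
    sample = [
        {"PartitionKey": "customerA", "RowKey": "2", "Name": "Bob"},
        {"PartitionKey": "customerA", "RowKey": "1", "Name": "Alice"},
        {"PartitionKey": "customerB", "RowKey": "1", "Name": "Carol"},
    ]
    print(sorted(partition_files("Customers", sample)))
    # ['Customers/customerA.json', 'Customers/customerB.json']
```

Sorting rows by RowKey is a design choice: it keeps exports of unchanged data identical between runs, which makes differences between recovery points easier to spot.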

Design Considerations

Of course, I also want to share my design considerations:

"Why a Function App and not Azure Automation?"

As our tables store sensitive customer data, we strictly refuse to expose them to public networks. Logically, this also applies to the backup storage accounts.

But there is a problem with Azure Automation: it doesn't support VNet Integration, so it can't communicate with private storage accounts through a VNet.

So we decided that this isn't an option for us.

"Functions plan"

As mentioned in the previous point, we need a private connection between the storage accounts and the Function App.

VNet Integration is not available for Function Apps on the Consumption plan, so we need to go a little higher.

We decided to use the smallest Elastic Premium tier, "EP1", for our infrastructure, which I would also recommend to anyone with normal table sizes.

How to use?

Preparation

First, you need to create a new storage account that receives the backup data.

I would recommend ZRS, which replicates your data synchronously across three availability zones within the region.

It's planned to extend the functionality to Blob Storage, which would also let you use GRS. At the time of writing, Azure Backup for Blob Storage is in public preview, which is why we use Azure Files here for production workloads.

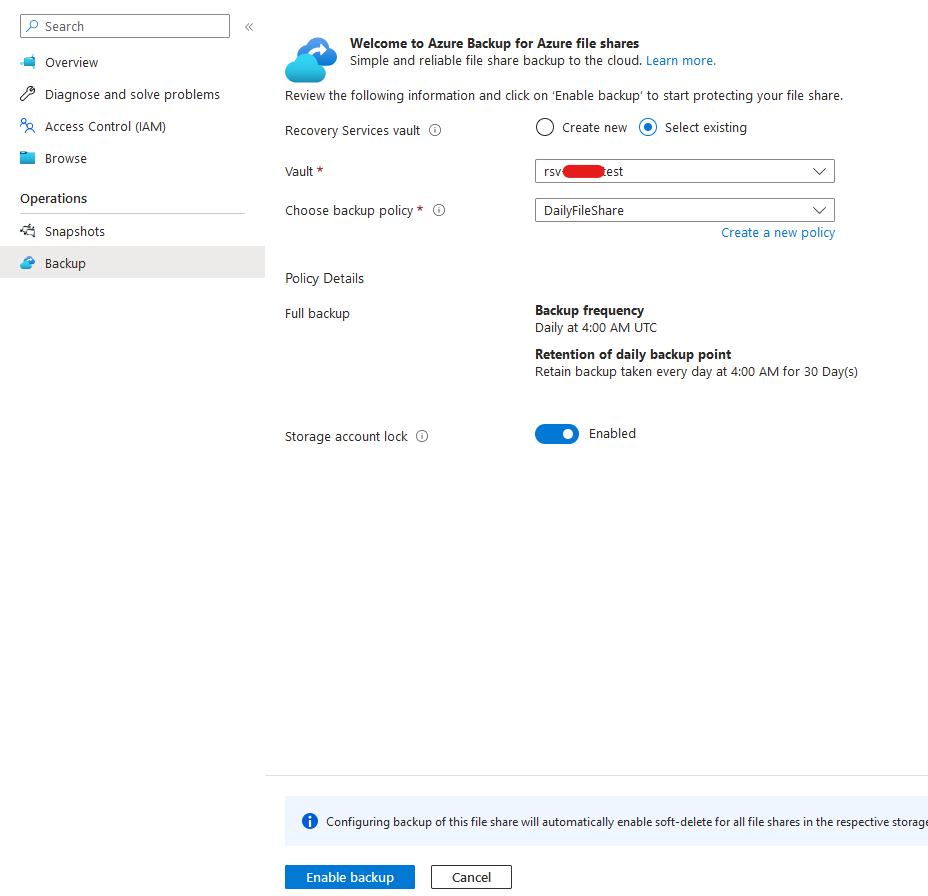

Second, you'll need a Recovery Services Vault, which you can set up at your own discretion.

Depending on workload and sensitivity, I recommend a daily backup policy with the following retention settings:

- Daily backup: 30 days

- Monthly backup: 6-12 months

Of course, you can back up more frequently, but keep the Function App schedule in mind: the file share backup should run after the export has finished, otherwise it captures the previous export.
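The timing dependency between the export and the file share backup can be checked with simple time-of-day arithmetic. This Python sketch is a hypothetical helper, not part of the author's solution: the maximum export duration is an assumption you would measure from the function's logs, and both times must use the same time zone (Azure Functions evaluates NCronTab schedules in UTC by default, so convert the backup policy time accordingly).

```python
from datetime import date, datetime, time, timedelta


def backup_after_export(export_start: time, max_export_duration: timedelta, backup_time: time) -> bool:
    """Check that a daily backup only runs after the daily export has finished.

    Both times must be expressed in the same time zone (for example UTC).
    """
    reference = date(2000, 1, 1)  # arbitrary day; only the time of day matters
    start = datetime.combine(reference, export_start)
    finished = start + max_export_duration
    backup = datetime.combine(reference, backup_time)
    if backup < start:
        backup += timedelta(days=1)  # the backup falls on the following day
    return backup >= finished


if __name__ == "__main__":
    # Export at 03:00 taking up to one hour, backup policy at 05:00.
    print(backup_after_export(time(3, 0), timedelta(hours=1), time(5, 0)))  # True
```

If the backup time lies before the export start, the sketch treats it as the next day's run: that still captures a complete export, but one that is almost a day old.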

Deploy Function App

Visit my repo on GitHub.

There you'll find two main folders: the actual Function App and a Bicep template for deploying it to your tenant.

The easiest way is to use the big blue "Deploy to Azure" button here :)

Fill in the following parameters:

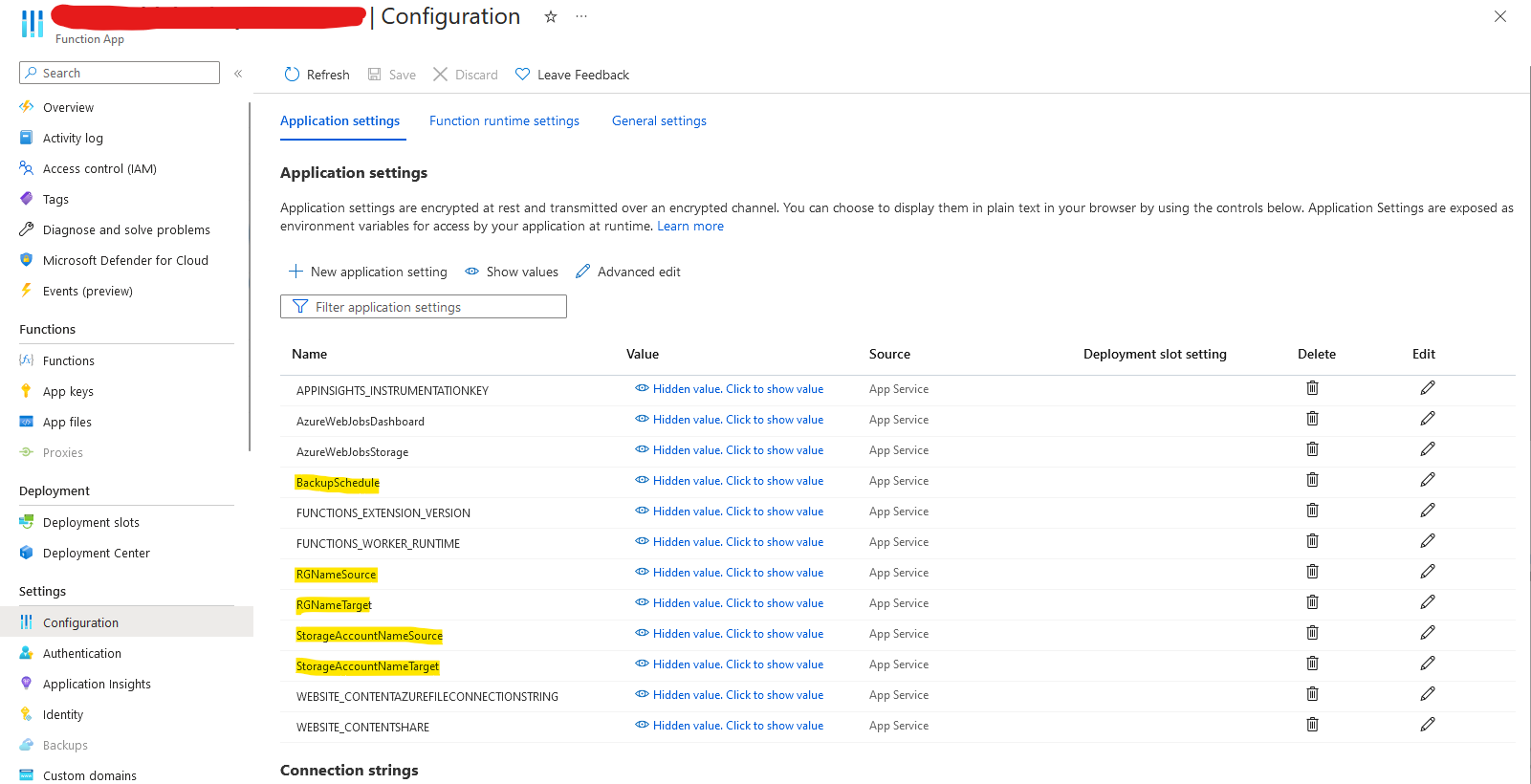

- AppName -> The desired Function App name

- RG Name Source -> Name of the source storage account's resource group

- RG Name Target -> Name of the target storage account's resource group

- Storage Account Name Source -> Name of the source storage account

- Storage Account Name Target -> Name of the target storage account

For the "Backup Schedule" parameter, you'll need to enter an NCronTab expression:

{second} {minute} {hour} {day} {month} {day-of-week}

The default value is 0 0 3 * * *, which simply means "execute this task every day at 03:00 AM". Azure Functions evaluates this schedule in UTC by default.

You can find further information about NCronTab expressions here.
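To see how a six-field expression maps to concrete run times, here is a small NCronTab evaluator in Python. It's a simplified sketch, not the parser Azure Functions uses: it supports `*`, single values, lists, ranges and `/` steps, but no month or weekday names, and it requires a day to match both the day and day-of-week fields, which may differ from NCronTab when both fields are restricted. Day-of-week uses 0 for Sunday.

```python
from datetime import datetime, timedelta
from typing import Set

# Value ranges for {second} {minute} {hour} {day} {month} {day-of-week}
FIELD_RANGES = [(0, 59), (0, 59), (0, 23), (1, 31), (1, 12), (0, 6)]


def parse_field(expr: str, low: int, high: int) -> Set[int]:
    """Expand one field such as '*', '5', '1,15', '9-17', '*/10' or '0-30/5'."""
    values: Set[int] = set()
    for part in expr.split(","):
        rng, _, step_text = part.partition("/")
        step = int(step_text) if step_text else 1
        if rng == "*":
            start, end = low, high
        elif "-" in rng:
            start_text, end_text = rng.split("-", 1)
            start, end = int(start_text), int(end_text)
        else:
            start = int(rng)
            # 'n/step' means "from n to the end of the range, every step"
            end = high if step_text else start
        if step < 1 or not low <= start <= end <= high:
            raise ValueError(f"invalid field {expr!r}")
        values.update(range(start, end + 1, step))
    return values


def next_run(expression: str, after: datetime) -> datetime:
    """Return the first time strictly after `after` that matches the expression."""
    fields = expression.split()
    if len(fields) != 6:
        raise ValueError("expected 6 fields: second minute hour day month day-of-week")
    seconds, minutes, hours, days, months, weekdays = (
        parse_field(f, lo, hi) for f, (lo, hi) in zip(fields, FIELD_RANGES)
    )
    earliest = after.replace(microsecond=0) + timedelta(seconds=1)
    day = earliest.date()
    for _ in range(366 * 8):  # search horizon of roughly 8 years (enough for leap days)
        cron_weekday = (day.weekday() + 1) % 7  # Python: Monday=0; cron: Sunday=0
        if day.day in days and day.month in months and cron_weekday in weekdays:
            for h in sorted(hours):
                for m in sorted(minutes):
                    for s in sorted(seconds):
                        candidate = datetime(day.year, day.month, day.day, h, m, s,
                                             tzinfo=earliest.tzinfo)
                        if candidate >= earliest:
                            return candidate
        day += timedelta(days=1)
    raise ValueError(f"no run time found for {expression!r}")


if __name__ == "__main__":
    print(next_run("0 0 3 * * *", datetime(2024, 1, 1, 10, 0)))  # 2024-01-02 03:00:00
```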

After hitting the Create button, your Function App will be in place and configured to back up your Table Storage. Hurray!

Configuration

The Function App comes with a system-assigned managed identity, which it uses to authenticate against the Azure Storage Accounts.

To give the function access to your accounts, you'll need to assign the following RBAC roles to this identity:

- Scope: Source storage account -> Role: Storage Table Data Reader

- Scope: Target storage account -> Role: Storage File Data Contributor
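If you prefer scripting the role assignments over clicking through the portal, the following Python sketch builds the corresponding Azure CLI calls. It only assembles argument lists (run each one with `subprocess.run(cmd, check=True)` to execute it); the parameter names mirror the deployment parameters above, and the principal ID of the Function App's system-assigned identity can be looked up with `az functionapp identity show --name <app> --resource-group <rg> --query principalId --output tsv`. The role names are copied verbatim from the list above, so verify them against the built-in roles in your tenant before running the commands.

```python
from typing import List


def storage_account_scope(subscription_id: str, resource_group: str, account_name: str) -> str:
    """Build the Azure Resource Manager ID of a storage account (the role assignment scope)."""
    return (
        f"/subscriptions/{subscription_id}/resourceGroups/{resource_group}"
        f"/providers/Microsoft.Storage/storageAccounts/{account_name}"
    )


def role_assignment_commands(
    principal_id: str,
    subscription_id: str,
    rg_source: str,
    account_source: str,
    rg_target: str,
    account_target: str,
) -> List[List[str]]:
    """Return one `az role assignment create` argument list per required role."""
    assignments = [
        # Role names taken from the article; check them against the built-in roles.
        (storage_account_scope(subscription_id, rg_source, account_source), "Storage Table Data Reader"),
        (storage_account_scope(subscription_id, rg_target, account_target), "Storage File Data Contributor"),
    ]
    return [
        [
            "az", "role", "assignment", "create",
            "--assignee-object-id", principal_id,
            # A system-assigned managed identity is represented by a service principal.
            "--assignee-principal-type", "ServicePrincipal",
            "--role", role,
            "--scope", scope,
        ]
        for scope, role in assignments
    ]
```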

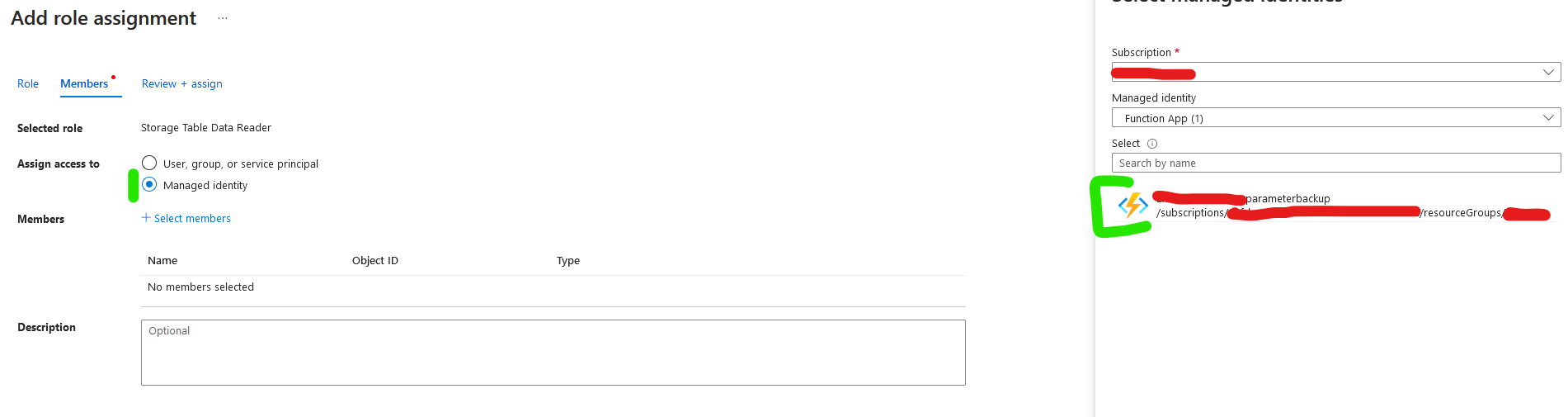

Be sure you choose the managed identity checkbox while creating the role assignment:

After this, it's time to give your Function App its first run!

First Run

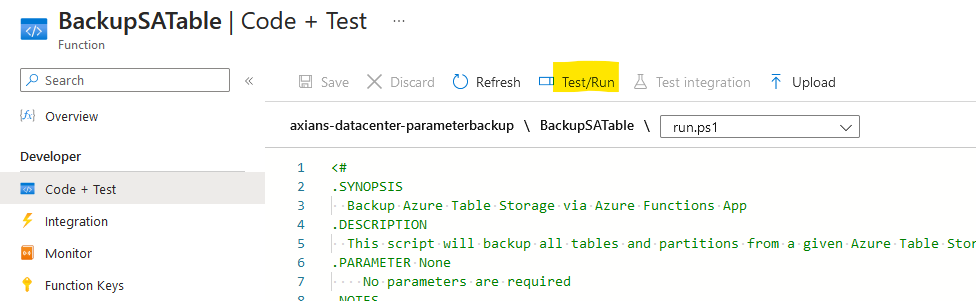

Go to your Function App and open your backup function:

Function App -> Functions -> BackupTable -> Code+Test

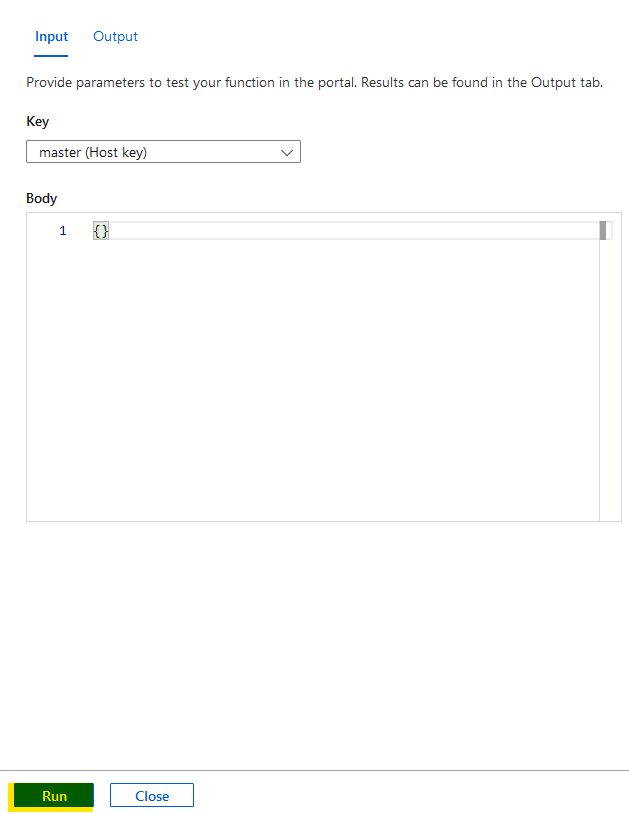

There, hit the Test/Run button and start the execution.

In the Logs section, you can monitor the execution progress.

The first execution may take a while, as it downloads all required packages.

Activating Azure Backup

After the first run, your target storage account should now contain a file share named "backup" + your table name.

In the file share, you'll find a folder for each of your tables containing its partitions as JSON files.
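Azure file share names are stricter than table names: shares must be lowercase, while table names may contain uppercase letters, and "backup" plus a 63-character table name exceeds the 63-character share limit. The following Python sketch derives a valid share name from a table name. Lowercasing and truncating are assumptions about how such a scheme could work; how the original function handles these cases isn't stated here, and truncation can make two long table names collide.

```python
import re

# Azure file share names: 3-63 characters, lowercase letters, digits and hyphens,
# starting and ending with a letter or digit, without consecutive hyphens.
SHARE_NAME_PATTERN = re.compile(r"[a-z0-9](?!.*--)[a-z0-9-]{1,61}[a-z0-9]")


def backup_share_name(table_name: str, prefix: str = "backup") -> str:
    """Derive a valid file share name from a prefix and a table name (hypothetical scheme)."""
    # Table names may contain uppercase letters, share names may not.
    name = (prefix + table_name).lower()[:63]
    if not SHARE_NAME_PATTERN.fullmatch(name):
        raise ValueError(f"cannot derive a valid share name from {table_name!r}")
    return name


if __name__ == "__main__":
    print(backup_share_name("Customers"))  # backupcustomers
```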

Using the Recovery Services Vault we created at the beginning, you can now configure Azure Backup for this file share.

Changing the Function Parameters

If you need to change settings that you defined during deployment, you can do so in the configuration settings of your Function App:

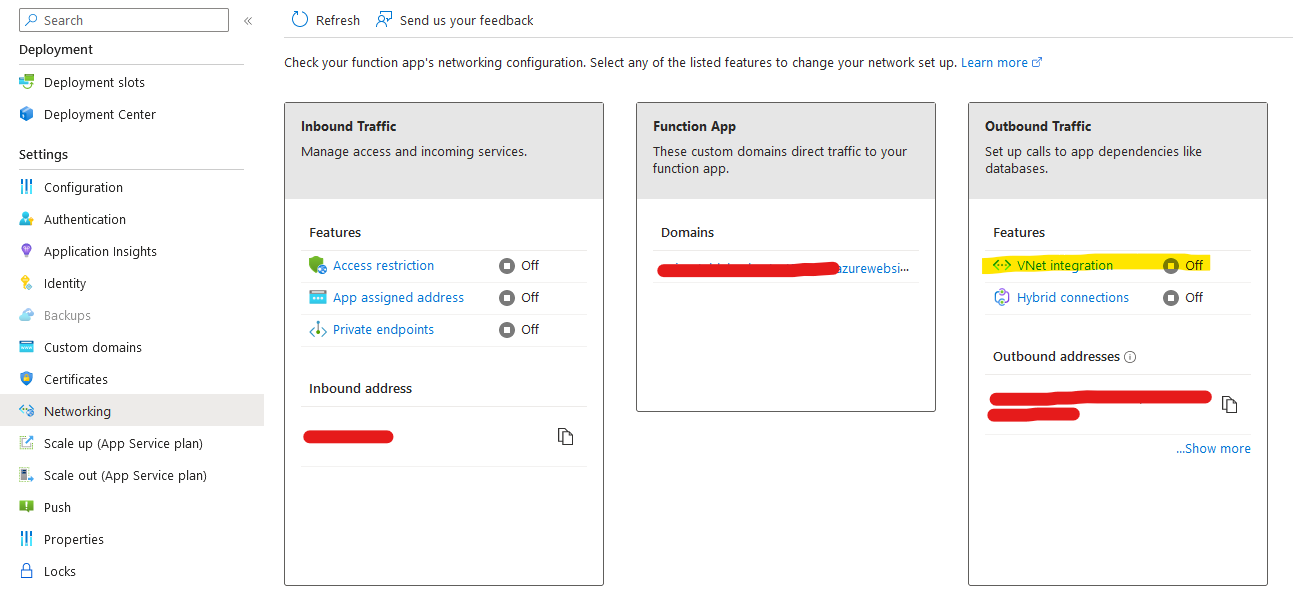
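Function App settings are exposed to the running function as environment variables, which is why a change in the configuration takes effect without redeploying the code (saving app settings restarts the app). A timer trigger can also take its schedule from an app setting by using the `%SettingName%` syntax in its binding. The Python sketch below shows how a function could read and validate such settings; the setting names `SourceStorageAccount`, `TargetStorageAccount` and `BackupSchedule` are hypothetical, so check the configuration blade for the names your deployment actually uses.

```python
import os
from typing import Dict, Mapping, Optional

# Hypothetical setting names; use the names shown in your Function App's configuration.
REQUIRED_SETTINGS = ("SourceStorageAccount", "TargetStorageAccount", "BackupSchedule")


def load_settings(env: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Read the backup settings, which Azure Functions exposes as environment variables."""
    source = os.environ if env is None else env
    missing = [name for name in REQUIRED_SETTINGS if not source.get(name)]
    if missing:
        # Fail early with a clear message instead of a half-configured backup run.
        raise KeyError("missing app settings: " + ", ".join(missing))
    return {name: source[name] for name in REQUIRED_SETTINGS}
```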

VNET Integration

To secure the communication between the production and the backup storage account, you'll need to enable VNet Integration on the Function App.

To do this, go to the Networking tab and enable VNet Integration:

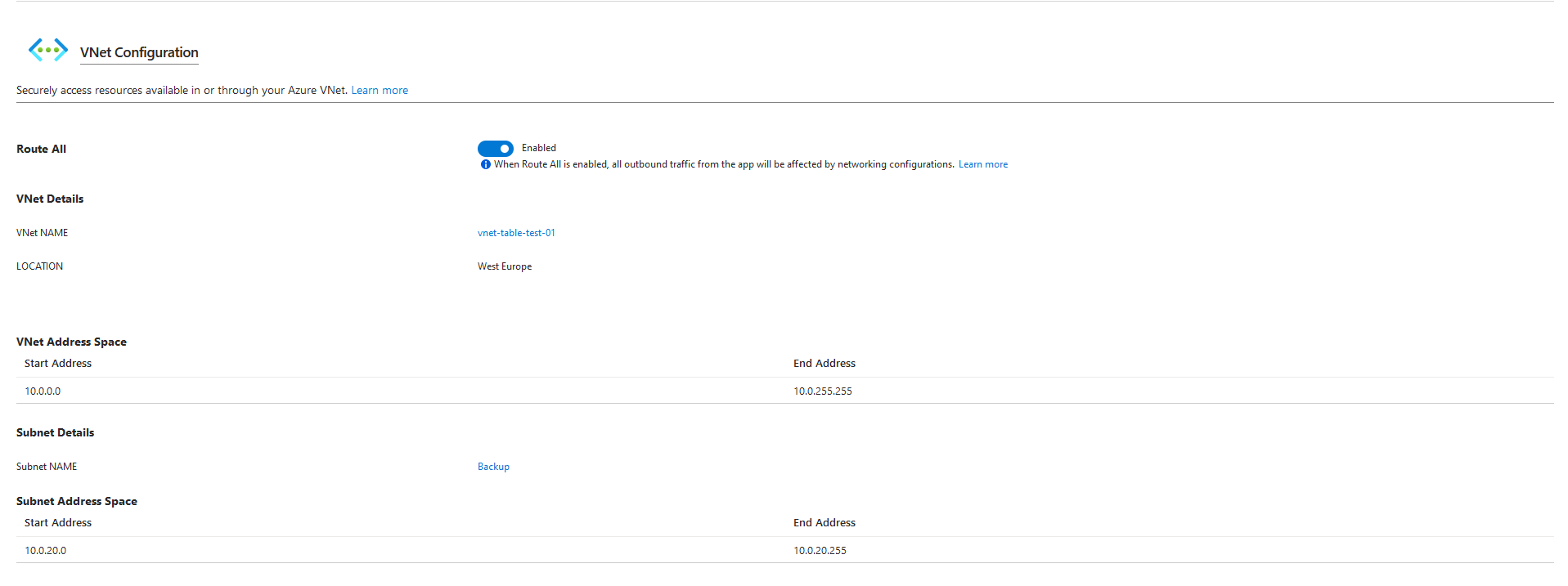

I've created a separate subnet in my storage account's VNet, where I could also integrate Azure Backup with a private endpoint if I wanted to.

Once you're done with this, you can restrict the backup storage account to this specific VNet so nobody else can access it:

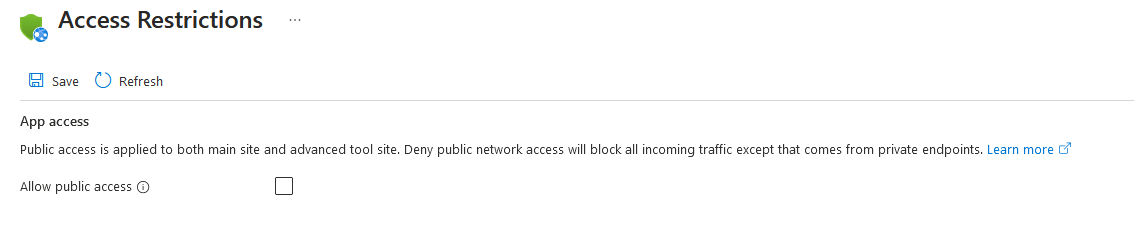

In the access restriction settings, it's recommended to disable public access to the Function App: the app is triggered by a timer, so no external access is required.

Note that if you want to test or run the function in the portal, you'll need to re-enable public access and at least allow your own IP address.

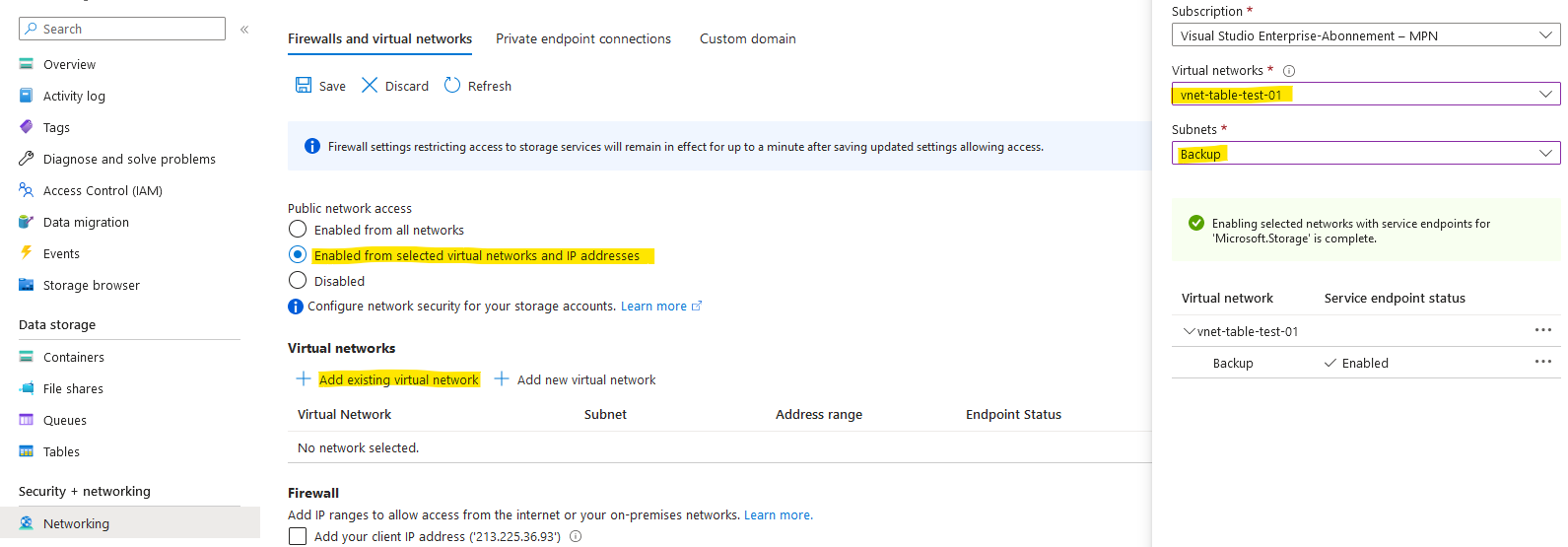

After that, you can restrict access to your backup storage account. To do this, open your storage account and select the Networking tab.

Then choose "Enabled from selected virtual networks and IP addresses" and add your backup subnet in the Virtual networks section.

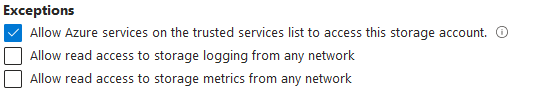

In addition, make sure to enable the exception "Allow Azure services on the trusted services list to access this storage account" below, so that Azure Backup can still reach the account.

Of course, this doesn't apply if you enable a private endpoint on your Recovery Services Vault.

Conclusion

So we've now implemented a backup solution for Table Storage with secure connectivity between all components.

You can restore each partition key by downloading the related JSON file and writing its content back to your table via PowerShell! To restore specific row keys only, just remove the other entries from the JSON file.
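Instead of editing the JSON file by hand, the row-key selection can also be done programmatically. This Python sketch assumes each backup file contains a JSON array of entity objects with `PartitionKey` and `RowKey` properties; the actual file format of the author's function may differ. The selected entities could then be written back, for example with `TableClient.upsert_entity` from the `azure-data-tables` Python package, or with PowerShell as described above.

```python
import json
from typing import Any, Dict, Iterable, List


def select_rows(partition_json: str, row_keys: Iterable[str]) -> List[Dict[str, Any]]:
    """Return only the entities with the given RowKeys from one partition's JSON export."""
    wanted = set(row_keys)
    entities = json.loads(partition_json)  # assumed: a JSON array of entity objects
    selected = [entity for entity in entities if entity.get("RowKey") in wanted]
    missing = wanted - {entity["RowKey"] for entity in selected}
    if missing:
        # Fail loudly instead of silently restoring fewer rows than requested.
        raise KeyError(f"row keys not in backup: {sorted(missing)}")
    return selected
```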

Once Azure Blob Storage backup becomes generally available, I'll update the function and this post.

Let me know your thoughts in the comment section below!

Cheers! :)